| Type: | Package |

| Title: | Assessment of Regression Models Performance |

| Version: | 0.15.3 |

| Maintainer: | Daniel Lüdecke <officialeasystats@gmail.com> |

| Description: | Utilities for computing measures to assess model quality, which are not directly provided by R's 'base' or 'stats' packages. These include e.g. measures like r-squared, intraclass correlation coefficient (Nakagawa, Johnson & Schielzeth (2017) <doi:10.1098/rsif.2017.0213>), root mean squared error or functions to check models for overdispersion, singularity or zero-inflation and more. Functions apply to a large variety of regression models, including generalized linear models, mixed effects models and Bayesian models. References: Lüdecke et al. (2021) <doi:10.21105/joss.03139>. |

| License: | GPL-3 |

| URL: | https://easystats.github.io/performance/ |

| BugReports: | https://github.com/easystats/performance/issues |

| Depends: | R (≥ 4.0) |

| Imports: | bayestestR (≥ 0.17.0), insight (≥ 1.4.2), datawizard (≥ 1.3.0), stats, methods, utils |

| Suggests: | AER, afex, BayesFactor, bayesplot, betareg, bigutilsr, blavaan, boot, brms, car, carData, CompQuadForm, correlation (≥ 0.8.8), cplm, curl, dagitty, dbscan, DHARMa (≥ 0.4.7), discovr, estimatr, fixest, flextable, forecast, ftExtra, gamm4, ggdag, glmmTMB (≥ 1.1.12), GPArotation, graphics, Hmisc, httr2, ICS, ICSOutlier, ISLR, ivreg, lavaan, lme4, lmtest, loo, MASS, Matrix, mclogit, mclust, metadat, metafor, mgcv, mlogit, modelbased (≥ 0.12.0), multimode, nestedLogit, nlme, nnet, nonnest2, ordinal, parallel, parameters (≥ 0.28.0), patchwork, pscl, psych, psychTools, quantreg, qqplotr (≥ 0.0.6), randomForest, RcppEigen, reformulas, rempsyc, rmarkdown, rstanarm, rstantools, sandwich, see (≥ 0.9.0), survey, survival, testthat (≥ 3.2.1), tweedie, VGAM, withr (≥ 3.0.0) |

| Encoding: | UTF-8 |

| Language: | en-US |

| RoxygenNote: | 7.3.3 |

| Config/testthat/edition: | 3 |

| Config/testthat/parallel: | true |

| Config/Needs/website: | rstudio/bslib, r-lib/pkgdown, easystats/easystatstemplate |

| Config/rcmdcheck/ignore-inconsequential-notes: | true |

| NeedsCompilation: | no |

| Packaged: | 2025-12-01 11:05:02 UTC; mail |

| Author: | Daniel Lüdecke |

| Repository: | CRAN |

| Date/Publication: | 2025-12-01 11:50:02 UTC |

performance: An R Package for Assessment, Comparison and Testing of Statistical Models

Description

A crucial aspect when building regression models is to evaluate the quality of modelfit. It is important to investigate how well models fit to the data and which fit indices to report. Functions to create diagnostic plots or to compute fit measures do exist, however, mostly spread over different packages. There is no unique and consistent approach to assess the model quality for different kind of models.

The primary goal of the performance package is to fill this gap and to provide utilities for computing indices of model quality and goodness of fit. These include measures like r-squared (R2), root mean squared error (RMSE) or intraclass correlation coefficient (ICC), but also functions to check (mixed) models for overdispersion, zero-inflation, convergence or singularity.

References: Lüdecke et al. (2021) doi:10.21105/joss.03139

Details

performance-package

Author(s)

Maintainer: Daniel Lüdecke officialeasystats@gmail.com (ORCID)

Authors:

Dominique Makowski dom.makowski@gmail.com (ORCID) [contributor]

Mattan S. Ben-Shachar matanshm@post.bgu.ac.il (ORCID) [contributor]

Indrajeet Patil patilindrajeet.science@gmail.com (ORCID) [contributor]

Philip Waggoner philip.waggoner@gmail.com (ORCID) [contributor]

Brenton M. Wiernik brenton@wiernik.org (ORCID) [contributor]

Rémi Thériault remi.theriault@mail.mcgill.ca (ORCID) [contributor]

Other contributors:

Vincent Arel-Bundock vincent.arel-bundock@umontreal.ca (ORCID) [contributor]

Martin Jullum [reviewer]

gjo11 [reviewer]

Etienne Bacher etienne.bacher@protonmail.com (ORCID) [contributor]

Joseph Luchman (ORCID) [contributor]

See Also

Useful links:

Report bugs at https://github.com/easystats/performance/issues

Binned residuals for binomial logistic regression

Description

Check model quality of binomial logistic regression models.

Usage

binned_residuals(

model,

term = NULL,

n_bins = NULL,

show_dots = NULL,

ci = 0.95,

ci_type = "exact",

residuals = "deviance",

iterations = 1000,

verbose = TRUE,

...

)

Arguments

model |

A |

term |

Name of independent variable from |

n_bins |

Numeric, the number of bins to divide the data. If

|

show_dots |

Logical, if |

ci |

Numeric, the confidence level for the error bounds. |

ci_type |

Character, the type of error bounds to calculate. Can be

|

residuals |

Character, the type of residuals to calculate. Can be

|

iterations |

Integer, the number of iterations to use for the

bootstrap method. Only used if |

verbose |

Toggle warnings and messages. |

... |

Currently not used. |

Details

Binned residual plots are achieved by "dividing the data into categories (bins) based on their fitted values, and then plotting the average residual versus the average fitted value for each bin." (Gelman, Hill 2007: 97). If the model were true, one would expect about 95% of the residuals to fall inside the error bounds.

If term is not NULL, one can compare the residuals in

relation to a specific model predictor. This may be helpful to check if a

term would fit better when transformed, e.g. a rising and falling pattern

of residuals along the x-axis is a signal to consider taking the logarithm

of the predictor (cf. Gelman and Hill 2007, pp. 97-98).

Value

A data frame representing the data that is mapped in the accompanying plot. In case all residuals are inside the error bounds, points are black. If some of the residuals are outside the error bounds (indicated by the grey-shaded area), blue points indicate residuals that are OK, while red points indicate model under- or over-fitting for the relevant range of estimated probabilities.

Note

binned_residuals() returns a data frame, however, the print()

method only returns a short summary of the result. The data frame itself

is used for plotting. The plot() method, in turn, creates a ggplot-object.

References

Gelman, A., and Hill, J. (2007). Data analysis using regression and multilevel/hierarchical models. Cambridge; New York: Cambridge University Press.

Examples

model <- glm(vs ~ wt + mpg, data = mtcars, family = "binomial")

result <- binned_residuals(model)

result

# look at the data frame

as.data.frame(result)

# plot

plot(result, show_dots = TRUE)

Check model for independence of residuals.

Description

Check model for independence of residuals, i.e. for autocorrelation of error terms.

Usage

check_autocorrelation(x, ...)

## Default S3 method:

check_autocorrelation(x, nsim = 1000, ...)

## S3 method for class 'performance_simres'

check_autocorrelation(x, time = NULL, ...)

Arguments

x |

A model object, or an object returned by |

... |

Currently not used for models. For simulated residuals, arguments are

passed to |

nsim |

Number of simulations for the Durbin-Watson-Test. |

time |

A vector with time values to specify the temporal order of the data.

Only used if |

Details

Performs a Durbin-Watson-Test to check for autocorrelated residuals. In case of autocorrelation, robust standard errors return more accurate results for the estimates, or maybe a mixed model with error term for the cluster groups should be used.

Value

Invisibly returns the p-value of the test statistics. A p-value < 0.05 indicates autocorrelated residuals.

See Also

Other functions to check model assumptions and and assess model quality:

check_collinearity(),

check_convergence(),

check_heteroscedasticity(),

check_homogeneity(),

check_model(),

check_outliers(),

check_overdispersion(),

check_predictions(),

check_singularity(),

check_zeroinflation()

Examples

m <- lm(mpg ~ wt + cyl + gear + disp, data = mtcars)

check_autocorrelation(m)

Check suitability of data for clustering

Description

This checks whether the data is appropriate for clustering using the Hopkins'

H statistic of given data. If the value of Hopkins statistic is close to 0

(below 0.5), then we can reject the null hypothesis and conclude that the

dataset is significantly clusterable. A value for H lower than 0.25 indicates

a clustering tendency at the 90% confidence level. The visual assessment of

cluster tendency (VAT) approach (Bezdek and Hathaway, 2002) consists in

investigating the heatmap of the ordered dissimilarity matrix. Following

this, one can potentially detect the clustering tendency by counting the

number of square shaped blocks along the diagonal.

Usage

check_clusterstructure(x, standardize = TRUE, distance = "euclidean", ...)

Arguments

x |

A data frame. |

standardize |

Standardize the data frame before clustering (default). |

distance |

Distance method used. Other methods than "euclidean"

(default) are exploratory in the context of clustering tendency. See

|

... |

Arguments passed to or from other methods. |

Value

The H statistic (numeric)

References

Lawson, R. G., & Jurs, P. C. (1990). New index for clustering tendency and its application to chemical problems. Journal of chemical information and computer sciences, 30(1), 36-41.

Bezdek, J. C., & Hathaway, R. J. (2002, May). VAT: A tool for visual assessment of (cluster) tendency. In Proceedings of the 2002 International Joint Conference on Neural Networks. IJCNN02 (3), 2225-2230. IEEE.

See Also

check_kmo(), check_sphericity_bartlett() and

check_factorstructure().

Examples

library(performance)

check_clusterstructure(iris[, 1:4])

plot(check_clusterstructure(iris[, 1:4]))

Check for multicollinearity of model terms

Description

check_collinearity() checks regression models for multicollinearity by

calculating the (generalized) variance inflation factor (VIF, Fox & Monette

1992). multicollinearity() is an alias for check_collinearity().

check_concurvity() is a wrapper around mgcv::concurvity(), and can be

considered as a collinearity check for smooth terms in GAMs. Confidence

intervals for VIF and tolerance are based on Marcoulides et al. (2019,

Appendix B).

Usage

check_collinearity(x, ...)

multicollinearity(x, ...)

## Default S3 method:

check_collinearity(x, ci = 0.95, verbose = TRUE, ...)

## S3 method for class 'glmmTMB'

check_collinearity(x, component = "all", ci = 0.95, verbose = TRUE, ...)

check_concurvity(x, ...)

Arguments

x |

A model object (that should at least respond to |

... |

Currently not used. |

ci |

Confidence Interval (CI) level for VIF and tolerance values. |

verbose |

Toggle off warnings or messages. |

component |

For models with zero-inflation component, multicollinearity

can be checked for the conditional model (count component, |

Details

check_collinearity() calculates the generalized variance inflation factor

(Fox & Monette 1992), which also returns valid results for categorical

variables. The adjusted VIF is calculated as VIF^(1/(2*<nlevels>) (Fox &

Monette 1992), which is identical to the square root of the VIF for numeric

predictors, or for categorical variables with two levels.

Value

A data frame with information about name of the model term, the

(generalized) variance inflation factor and associated confidence intervals,

the adjusted VIF, which is the factor by which the standard error is

increased due to possible correlation with other terms (inflation due to

collinearity), and tolerance values (including confidence intervals), where

tolerance = 1/vif.

Multicollinearity

Multicollinearity should not be confused with a raw strong correlation between predictors. What matters is the association between one or more predictor variables, conditional on the other variables in the model. In a nutshell, multicollinearity means that once you know the effect of one predictor, the value of knowing the other predictor is rather low. Thus, one of the predictors doesn't help much in terms of better understanding the model or predicting the outcome. As a consequence, if multicollinearity is a problem, the model seems to suggest that the predictors in question don't seems to be reliably associated with the outcome (low estimates, high standard errors), although these predictors actually are strongly associated with the outcome, i.e. indeed might have strong effect (McElreath 2020, chapter 6.1).

Multicollinearity might arise when a third, unobserved variable has a causal effect on each of the two predictors that are associated with the outcome. In such cases, the actual relationship that matters would be the association between the unobserved variable and the outcome.

Remember: "Pairwise correlations are not the problem. It is the conditional associations - not correlations - that matter." (McElreath 2020, p. 169)

Interpretation of the Variance Inflation Factor

The variance inflation factor is a measure to analyze the magnitude of multicollinearity of model terms. A VIF less than 5 indicates a low correlation of that predictor with other predictors. A value between 5 and 10 indicates a moderate correlation, while VIF values larger than 10 are a sign for high, not tolerable correlation of model predictors (James et al. 2013). The adjusted VIF column in the output indicates how much larger the standard error is due to the association with other predictors conditional on the remaining variables in the model. Note that these thresholds, although commonly used, are also criticized for being too high. Zuur et al. (2010) suggest using lower values, e.g. a VIF of 3 or larger may already no longer be considered as "low".

Multicollinearity and Interaction Terms

If interaction terms are included in a model, high VIF values are expected. This portion of multicollinearity among the component terms of an interaction is also called "inessential ill-conditioning", which leads to inflated VIF values that are typically seen for models with interaction terms (Francoeur 2013). Centering interaction terms can resolve this issue (Kim and Jung 2024).

Multicollinearity and Polynomial Terms

Polynomial transformations are considered a single term and thus VIFs are not calculated between them.

Concurvity for Smooth Terms in Generalized Additive Models

check_concurvity() is a wrapper around mgcv::concurvity(), and can be

considered as a collinearity check for smooth terms in GAMs."Concurvity

occurs when some smooth term in a model could be approximated by one or more

of the other smooth terms in the model." (see ?mgcv::concurvity).

check_concurvity() returns a column named VIF, which is the "worst"

measure. While mgcv::concurvity() range between 0 and 1, the VIF value

is 1 / (1 - worst), to make interpretation comparable to classical VIF

values, i.e. 1 indicates no problems, while higher values indicate

increasing lack of identifiability. The VIF proportion column equals the

"estimate" column from mgcv::concurvity(), ranging from 0 (no problem) to

1 (total lack of identifiability).

Note

The code to compute the confidence intervals for the VIF and tolerance

values was adapted from the Appendix B from the Marcoulides et al. paper.

Thus, credits go to these authors the original algorithm. There is also

a plot()-method

implemented in the see-package.

References

Fox, J., & Monette, G. (1992). Generalized Collinearity Diagnostics. Journal of the American Statistical Association, 87(417), 178–183.

Francoeur, R. B. (2013). Could Sequential Residual Centering Resolve Low Sensitivity in Moderated Regression? Simulations and Cancer Symptom Clusters. Open Journal of Statistics, 03(06), 24-44.

James, G., Witten, D., Hastie, T., and Tibshirani, R. (eds.). (2013). An introduction to statistical learning: with applications in R. New York: Springer.

Kim, Y., & Jung, G. (2024). Understanding linear interaction analysis with causal graphs. British Journal of Mathematical and Statistical Psychology, 00, 1–14.

Marcoulides, K. M., and Raykov, T. (2019). Evaluation of Variance Inflation Factors in Regression Models Using Latent Variable Modeling Methods. Educational and Psychological Measurement, 79(5), 874–882.

McElreath, R. (2020). Statistical rethinking: A Bayesian course with examples in R and Stan. 2nd edition. Chapman and Hall/CRC.

Vanhove, J. (2021) Collinearity Isn’t a Disease That Needs Curing. Meta-Psychology, 5. doi:10.15626/MP.2021.2548

Zuur AF, Ieno EN, Elphick CS. A protocol for data exploration to avoid common statistical problems: Data exploration. Methods in Ecology and Evolution (2010) 1:3–14.

See Also

see::plot.see_check_collinearity() for options to customize the plot.

Other functions to check model assumptions and and assess model quality:

check_autocorrelation(),

check_convergence(),

check_heteroscedasticity(),

check_homogeneity(),

check_model(),

check_outliers(),

check_overdispersion(),

check_predictions(),

check_singularity(),

check_zeroinflation()

Examples

m <- lm(mpg ~ wt + cyl + gear + disp, data = mtcars)

check_collinearity(m)

# plot results

x <- check_collinearity(m)

plot(x)

Convergence test for mixed effects models

Description

check_convergence() provides an alternative convergence

test for merMod-objects.

Usage

check_convergence(x, tolerance = 0.001, ...)

Arguments

x |

A |

tolerance |

Indicates up to which value the convergence result is

accepted. The smaller |

... |

Currently not used. |

Value

TRUE if convergence is fine and FALSE if convergence

is suspicious. Additionally, the convergence value is returned as attribute.

Convergence and log-likelihood

Convergence problems typically arise when the model hasn't converged to a solution where the log-likelihood has a true maximum. This may result in unreliable and overly complex (or non-estimable) estimates and standard errors.

Inspect model convergence

lme4 performs a convergence-check (see ?lme4::convergence),

however, as as discussed here

and suggested by one of the lme4-authors in

this comment,

this check can be too strict. check_convergence() thus provides an

alternative convergence test for merMod-objects.

Resolving convergence issues

Convergence issues are not easy to diagnose. The help page on

?lme4::convergence provides most of the current advice about

how to resolve convergence issues. Another clue might be large parameter

values, e.g. estimates (on the scale of the linear predictor) larger than

10 in (non-identity link) generalized linear model might indicate

complete separation.

Complete separation can be addressed by regularization, e.g. penalized

regression or Bayesian regression with appropriate priors on the fixed effects.

Convergence versus Singularity

Note the different meaning between singularity and convergence: singularity indicates an issue with the "true" best estimate, i.e. whether the maximum likelihood estimation for the variance-covariance matrix of the random effects is positive definite or only semi-definite. Convergence is a question of whether we can assume that the numerical optimization has worked correctly or not.

See Also

Other functions to check model assumptions and and assess model quality:

check_autocorrelation(),

check_collinearity(),

check_heteroscedasticity(),

check_homogeneity(),

check_model(),

check_outliers(),

check_overdispersion(),

check_predictions(),

check_singularity(),

check_zeroinflation()

Examples

data(cbpp, package = "lme4")

set.seed(1)

cbpp$x <- rnorm(nrow(cbpp))

cbpp$x2 <- runif(nrow(cbpp))

model <- lme4::glmer(

cbind(incidence, size - incidence) ~ period + x + x2 + (1 + x | herd),

data = cbpp,

family = binomial()

)

check_convergence(model)

model <- suppressWarnings(glmmTMB::glmmTMB(

Sepal.Length ~ poly(Petal.Width, 4) * poly(Petal.Length, 4) +

(1 + poly(Petal.Width, 4) | Species),

data = iris

))

check_convergence(model)

Check correct model adjustment for identifying causal effects

Description

The purpose of check_dag() is to build, check and visualize

your model based on directed acyclic graphs (DAG). The function checks if a

model is correctly adjusted for identifying specific relationships of

variables, especially directed (maybe also "causal") effects for given

exposures on an outcome. In case of incorrect adjustments, the function

suggests the minimal required variables that should be adjusted for (sometimes

also called "controlled for"), i.e. variables that at least need to be

included in the model. Depending on the goal of the analysis, it is still

possible to add more variables to the model than just the minimally required

adjustment sets.

check_dag() is a convenient wrapper around ggdag::dagify(),

dagitty::adjustmentSets() and dagitty::adjustedNodes() to check correct

adjustment sets. It returns a dagitty object that can be visualized with

plot(). as.dag() is a small convenient function to return the

dagitty-string, which can be used for the online-tool from the

dagitty-website.

Usage

check_dag(

...,

outcome = NULL,

exposure = NULL,

adjusted = NULL,

latent = NULL,

effect = "all",

coords = NULL

)

as.dag(x, ...)

Arguments

... |

One or more formulas, which are converted into dagitty syntax.

First element may also be model object. If a model objects is provided, its

formula is used as first formula, and all independent variables will be used

for the |

outcome |

Name of the dependent variable (outcome), as character string

or as formula. Must be a valid name from the formulas provided in |

exposure |

Name of the exposure variable (as character string or

formula), for which the direct and total causal effect on the |

adjusted |

A character vector or formula with names of variables that

are adjusted for in the model, e.g. |

latent |

A character vector with names of latent variables in the model. |

effect |

Character string, indicating which effect to check. Can be

|

coords |

Coordinates of the variables when plotting the DAG. The coordinates can be provided in three different ways:

See 'Examples'. |

x |

An object of class |

Value

An object of class check_dag, which can be visualized with plot().

The returned object also inherits from class dagitty and thus can be used

with all functions from the ggdag and dagitty packages.

Specifying the DAG formulas

The formulas have following syntax:

One-directed paths: On the left-hand-side is the name of the variables where causal effects point to (direction of the arrows, in dagitty-language). On the right-hand-side are all variables where causal effects are assumed to come from. For example, the formula

Y ~ X1 + X2, paths directed from bothX1andX2toYare assumed.Bi-directed paths: Use

~~to indicate bi-directed paths. For example,Y ~~ Xindicates that the path betweenYandXis bi-directed, and the arrow points in both directions. Bi-directed paths often indicate unmeasured cause, or unmeasured confounding, of the two involved variables.

Minimally required adjustments

The function checks if the model is correctly adjusted for identifying the direct and total effects of the exposure on the outcome. If the model is correctly specified, no adjustment is needed to estimate the direct effect. If the model is not correctly specified, the function suggests the minimally required variables that should be adjusted for. The function distinguishes between direct and total effects, and checks if the model is correctly adjusted for both. If the model is cyclic, the function stops and suggests to remove cycles from the model.

Note that it sometimes could be necessary to try out different combinations

of suggested adjustments, because check_dag() can not always detect whether

at least one of several variables is required, or whether adjustments should

be done for all listed variables. It can be useful to copy the dagitty-code

(using as.dag(), which prints the dagitty-string into the console) into

the dagitty-website and play around with different adjustments.

Direct and total effects

The direct effect of an exposure on an outcome is the effect that is not mediated by any other variable in the model. The total effect is the sum of the direct and indirect effects. The function checks if the model is correctly adjusted for identifying the direct and total effects of the exposure on the outcome.

Why are DAGs important - the Table 2 fallacy

Correctly thinking about and identifying the relationships between variables is important when it comes to reporting coefficients from regression models that mutually adjust for "confounders" or include covariates. Different coefficients might have different interpretations, depending on their relationship to other variables in the model. Sometimes, a regression coefficient represents the direct effect of an exposure on an outcome, but sometimes it must be interpreted as total effect, due to the involvement of mediating effects. This problem is also called "Table 2 fallacy" (Westreich and Greenland 2013). DAG helps visualizing and thereby focusing the relationships of variables in a regression model to detect missing adjustments or over-adjustment.

References

Rohrer, J. M. (2018). Thinking clearly about correlations and causation: Graphical causal models for observational data. Advances in Methods and Practices in Psychological Science, 1(1), 27–42. doi:10.1177/2515245917745629

Westreich, D., & Greenland, S. (2013). The Table 2 Fallacy: Presenting and Interpreting Confounder and Modifier Coefficients. American Journal of Epidemiology, 177(4), 292–298. doi:10.1093/aje/kws412

Examples

# no adjustment needed

check_dag(

y ~ x + b,

outcome = "y",

exposure = "x"

)

# incorrect adjustment

dag <- check_dag(

y ~ x + b + c,

x ~ b,

outcome = "y",

exposure = "x"

)

dag

plot(dag)

# After adjusting for `b`, the model is correctly specified

dag <- check_dag(

y ~ x + b + c,

x ~ b,

outcome = "y",

exposure = "x",

adjusted = "b"

)

dag

# using formula interface for arguments "outcome", "exposure" and "adjusted"

check_dag(

y ~ x + b + c,

x ~ b,

outcome = ~y,

exposure = ~x,

adjusted = ~ b + c

)

# if not provided, "outcome" is taken from first formula, same for "exposure"

# thus, we can simplify the above expression to

check_dag(

y ~ x + b + c,

x ~ b,

adjusted = ~ b + c

)

# use specific layout for the DAG

dag <- check_dag(

score ~ exp + b + c,

exp ~ b,

outcome = "score",

exposure = "exp",

coords = list(

# x-coordinates for all nodes

x = c(score = 5, exp = 4, b = 3, c = 3),

# y-coordinates for all nodes

y = c(score = 3, exp = 3, b = 2, c = 4)

)

)

plot(dag)

# alternative way of providing the coordinates

dag <- check_dag(

score ~ exp + b + c,

exp ~ b,

outcome = "score",

exposure = "exp",

coords = list(

# x/y coordinates for each node

score = c(5, 3),

exp = c(4, 3),

b = c(3, 2),

c = c(3, 4)

)

)

plot(dag)

# Objects returned by `check_dag()` can be used with "ggdag" or "dagitty"

ggdag::ggdag_status(dag)

# Using a model object to extract information about outcome,

# exposure and adjusted variables

data(mtcars)

m <- lm(mpg ~ wt + gear + disp + cyl, data = mtcars)

dag <- check_dag(

m,

wt ~ disp + cyl,

wt ~ am

)

dag

plot(dag)

Classify the distribution of a model-family using machine learning

Description

Choosing the right distributional family for regression models is essential to get more accurate estimates and standard errors. This function may help to check a models' distributional family and see if the model-family probably should be reconsidered. Since it is difficult to exactly predict the correct model family, consider this function as somewhat experimental.

Usage

check_distribution(model)

Arguments

model |

Typically, a model (that should response to |

Details

This function uses an internal random forest model to classify the

distribution from a model-family. Currently, following distributions are

trained (i.e. results of check_distribution() may be one of the

following): "bernoulli", "beta", "beta-binomial", "binomial",

"cauchy", "chi", "exponential", "F", "gamma", "half-cauchy",

"inverse-gamma", "lognormal", "normal", "negative binomial",

"negative binomial (zero-inflated)", "pareto", "poisson",

"poisson (zero-inflated)", "tweedie", "uniform" and "weibull".

Note the similarity between certain distributions according to shape, skewness,

etc. Thus, the predicted distribution may not be perfectly representing the

distributional family of the underlying fitted model, or the response value.

There is a plot() method, which shows the probabilities of all predicted

distributions, however, only if the probability is greater than zero.

Note

This function is somewhat experimental and might be improved in future

releases. The final decision on the model-family should also be based on

theoretical aspects and other information about the data and the model.

There is also a

plot()-method

implemented in the

see-package.

Examples

data(sleepstudy, package = "lme4")

model <<- lme4::lmer(Reaction ~ Days + (Days | Subject), sleepstudy)

check_distribution(model)

plot(check_distribution(model))

Check suitability of data for Factor Analysis (FA) with Bartlett's Test of Sphericity and KMO

Description

This checks whether the data is appropriate for Factor Analysis (FA) by running the Bartlett's Test of Sphericity and the Kaiser, Meyer, Olkin (KMO) Measure of Sampling Adequacy (MSA). See details below for more information about the interpretation and meaning of each test.

Usage

check_factorstructure(x, n = NULL, ...)

check_kmo(x, n = NULL, ...)

check_sphericity_bartlett(x, n = NULL, ...)

Arguments

x |

A data frame or a correlation matrix. If the latter is passed, |

n |

If a correlation matrix was passed, the number of observations must be specified. |

... |

Arguments passed to or from other methods. |

Details

Bartlett's Test of Sphericity

Bartlett's (1951) test of sphericity tests whether a matrix (of correlations) is significantly different from an identity matrix (filled with 0). It tests whether the correlation coefficients are all 0. The test computes the probability that the correlation matrix has significant correlations among at least some of the variables in a dataset, a prerequisite for factor analysis to work.

While it is often suggested to check whether Bartlett’s test of sphericity is significant before starting with factor analysis, one needs to remember that the test is testing a pretty extreme scenario (that all correlations are non-significant). As the sample size increases, this test tends to be always significant, which makes it not particularly useful or informative in well-powered studies.

Kaiser, Meyer, Olkin (KMO)

(Measure of Sampling Adequacy (MSA) for Factor Analysis.)

Kaiser (1970) introduced a Measure of Sampling Adequacy (MSA), later modified by Kaiser and Rice (1974). The Kaiser-Meyer-Olkin (KMO) statistic, which can vary from 0 to 1, indicates the degree to which each variable in a set is predicted without error by the other variables.

A value of 0 indicates that the sum of partial correlations is large relative to the sum correlations, indicating factor analysis is likely to be inappropriate. A KMO value close to 1 indicates that the sum of partial correlations is not large relative to the sum of correlations and so factor analysis should yield distinct and reliable factors. It means that patterns of correlations are relatively compact, and so factor analysis should yield distinct and reliable factors. Values smaller than 0.5 suggest that you should either collect more data or rethink which variables to include.

Kaiser (1974) suggested that KMO > .9 were marvelous, in the .80s, meritorious, in the .70s, middling, in the .60s, mediocre, in the .50s, miserable, and less than .5, unacceptable. Hair et al. (2006) suggest accepting a value > 0.5. Values between 0.5 and 0.7 are mediocre, and values between 0.7 and 0.8 are good.

Variables with individual KMO values below 0.5 could be considered for exclusion them from the analysis (note that you would need to re-compute the KMO indices as they are dependent on the whole dataset).

Value

A list of lists of indices related to sphericity and KMO.

References

This function is a wrapper around the KMO and the cortest.bartlett()

functions in the psych package (Revelle, 2016).

Revelle, W. (2016). How To: Use the psych package for Factor Analysis and data reduction.

Bartlett, M. S. (1951). The effect of standardization on a Chi-square approximation in factor analysis. Biometrika, 38(3/4), 337-344.

Kaiser, H. F. (1970). A second generation little jiffy. Psychometrika, 35(4), 401-415.

Kaiser, H. F., & Rice, J. (1974). Little jiffy, mark IV. Educational and psychological measurement, 34(1), 111-117.

Kaiser, H. F. (1974). An index of factorial simplicity. Psychometrika, 39(1), 31-36.

See Also

Examples

library(performance)

check_factorstructure(mtcars)

# One can also pass a correlation matrix

r <- cor(mtcars)

check_factorstructure(r, n = nrow(mtcars))

Check variables for within- and/or between-group variation

Description

Checks if variables vary within and/or between levels of grouping variables.

This function can be used to infer the hierarchical Design of a given

dataset, or detect any predictors that might cause heterogeneity bias (Bell

and Jones, 2015). Use summary() on the output if you are mainly interested

if and which predictors are possibly affected by heterogeneity bias.

Usage

check_group_variation(x, ...)

## Default S3 method:

check_group_variation(x, ...)

## S3 method for class 'data.frame'

check_group_variation(

x,

select = NULL,

by = NULL,

include_by = FALSE,

numeric_as_factor = FALSE,

tolerance_numeric = 1e-04,

tolerance_factor = "crossed",

...

)

## S3 method for class 'check_group_variation'

summary(object, flatten = FALSE, ...)

Arguments

x |

A data frame or a mixed model. See details and examples. |

... |

Arguments passed to other methods |

select |

Character vector (or formula) with names of variables to select

that should be checked. If |

by |

Character vector (or formula) with the name of the variable that

indicates the group- or cluster-ID. For cross-classified or nested designs,

|

include_by |

When there is more than one grouping variable, should they be check against each other? |

numeric_as_factor |

Should numeric variables be tested as factors? |

tolerance_numeric |

The minimal percent of variation (observed icc) that is tolerated to indicate no within- or no between-effect. |

tolerance_factor |

How should a non-numeric variable be identified as varying only "within" a grouping variable? Options are:

|

object |

result from |

flatten |

Logical, if |

Details

This function attempt to identify the variability of a set of variables

(select) with respect to one or more grouping variables (by). If x is a

(mixed effect) model, the variability of the fixed effects predictors are

checked with respect to the random grouping variables.

Generally, a variable is considered to vary between groups if is correlated

with those groups, and to vary within groups if it not a constant within at

least one group.

Numeric variables

Numeric variables are partitioned via datawizard::demean() to their

within- and between-group components. Then, the variance for each of these

two component is calculated. Variables with within-group variance larger than

tolerance_numeric are labeled as within, variables with a between-group

variance larger than tolerance_numeric are labeled as between, and

variables with both variances larger than tolerance_numeric are labeled as

both.

Setting numeric_as_factor = TRUE causes numeric variables to be tested

using the following criteria.

Non-numeric variables

These variables can have one of the following three labels:

-

between - the variable is correlated with the groups, and is fixed within each group (each group has exactly one unique, constant value)

-

within - the variable is crossed with the grouping variable, such that all possible values appear within each group. The

tolerance_factorargument controls if full balance is also required. -

both - the variable is correlated with the groups, but also varies within each group but is not fully crossed (or, when

tolerance_factor = "balanced"the variable is fully crossed, but not perfectly balanced).

Additionally, the design of non-numeric variables is also checked to see if

they are nested within the groups or is they are crossed. This is

indicated by the Design column.

Heterogeneity bias

Variables that vary both within and between groups can cause a heterogeneity

bias (Bell and Jones, 2015). It is recommended to center (person-mean

centering) those variables to avoid this bias. See datawizard::demean()

for further details. Use summary() to get a short text result that

indicates if and which predictors are possibly affected by heterogeneity

bias.

Value

A data frame with Group, Variable, Variation and Design columns.

References

Bell A, Jones K. 2015. Explaining Fixed Effects: Random Effects Modeling of Time-Series Cross-Sectional and Panel Data. Political Science Research and Methods, 3(1), 133–153.

See Also

For further details, read the vignette

https://easystats.github.io/parameters/articles/demean.html and also

see documentation for datawizard::demean().

Examples

data(npk)

check_group_variation(npk, by = "block")

data(iris)

check_group_variation(iris, by = "Species")

data(ChickWeight)

check_group_variation(ChickWeight, by = "Chick")

# A subset of mlmRev::egsingle

egsingle <- data.frame(

schoolid = factor(rep(c("2020", "2820"), times = c(18, 6))),

lowinc = rep(c(TRUE, FALSE), times = c(18, 6)),

childid = factor(rep(

c("288643371", "292020281", "292020361", "295341521"),

each = 6

)),

female = rep(c(TRUE, FALSE), each = 12),

year = rep(1:6, times = 4),

math = c(

-3.068, -1.13, -0.921, 0.463, 0.021, 2.035,

-2.732, -2.097, -0.988, 0.227, 0.403, 1.623,

-2.732, -1.898, -0.921, 0.587, 1.578, 2.3,

-2.288, -2.162, -1.631, -1.555, -0.725, 0.097

)

)

result <- check_group_variation(

egsingle,

by = c("schoolid", "childid"),

include_by = TRUE

)

result

summary(result)

data(sleepstudy, package = "lme4")

check_group_variation(sleepstudy, select = "Days", by = "Subject")

# Or

mod <- lme4::lmer(Reaction ~ Days + (Days | Subject), data = sleepstudy)

result <- check_group_variation(mod)

result

summary(result)

Check model predictor for heterogeneity bias (Deprecated)

Description

check_heterogeneity_bias() checks if model predictors or variables may

cause a heterogeneity bias, i.e. if variables have any within-group variance

(Bell and Jones, 2015).

We recommend using check_group_variation() instead, for a more detailed

and flexible examination of group-wise variability.

Usage

check_heterogeneity_bias(x, select = NULL, by = NULL, nested = FALSE)

Arguments

x |

A data frame or a mixed model object. |

select |

Character vector (or formula) with names of variables to select

that should be checked. If |

by |

Character vector (or formula) with the name of the variable that

indicates the group- or cluster-ID. For cross-classified or nested designs,

For nested designs,

See also section De-meaning for cross-classified designs and

De-meaning for nested designs in |

nested |

Logical, if |

References

Bell A, Jones K. 2015. Explaining Fixed Effects: Random Effects Modeling of Time-Series Cross-Sectional and Panel Data. Political Science Research and Methods, 3(1), 133–153.

See Also

For further details, read the vignette

https://easystats.github.io/parameters/articles/demean.html and also

see documentation for datawizard::demean().

For a more detailed and flexible examination of group-wise variability, see

check_group_variation().

Examples

data(iris)

iris$ID <- sample(1:4, nrow(iris), replace = TRUE) # fake-ID

check_heterogeneity_bias(iris, select = c("Sepal.Length", "Petal.Length"), by = "ID")

Check model for (non-)constant error variance

Description

Significance testing for linear regression models assumes that the model errors (or residuals) have constant variance. If this assumption is violated the p-values from the model are no longer reliable.

Usage

check_heteroscedasticity(x, ...)

check_heteroskedasticity(x, ...)

Arguments

x |

A model object. |

... |

Currently not used. |

Details

This test of the hypothesis of (non-)constant error is also called Breusch-Pagan test (1979).

Value

The p-value of the test statistics. A p-value < 0.05 indicates a non-constant variance (heteroskedasticity).

Note

There is also a plot()-method

implemented in the see-package.

References

Breusch, T. S., and Pagan, A. R. (1979) A simple test for heteroscedasticity and random coefficient variation. Econometrica 47, 1287-1294.

See Also

Other functions to check model assumptions and and assess model quality:

check_autocorrelation(),

check_collinearity(),

check_convergence(),

check_homogeneity(),

check_model(),

check_outliers(),

check_overdispersion(),

check_predictions(),

check_singularity(),

check_zeroinflation()

Examples

m <<- lm(mpg ~ wt + cyl + gear + disp, data = mtcars)

check_heteroscedasticity(m)

# plot results

x <- check_heteroscedasticity(m)

plot(x)

Check model for homogeneity of variances

Description

Check model for homogeneity of variances between groups described by independent variables in a model.

Usage

check_homogeneity(x, method = "bartlett", ...)

## S3 method for class 'afex_aov'

check_homogeneity(x, method = "levene", ...)

Arguments

x |

A linear model or an ANOVA object. |

method |

Name of the method (underlying test) that should be performed

to check the homogeneity of variances. May either be |

... |

Arguments passed down to |

Value

Invisibly returns the p-value of the test statistics. A p-value < 0.05 indicates a significant difference in the variance between the groups.

Note

There is also a plot()-method

implemented in the see-package.

See Also

Other functions to check model assumptions and and assess model quality:

check_autocorrelation(),

check_collinearity(),

check_convergence(),

check_heteroscedasticity(),

check_model(),

check_outliers(),

check_overdispersion(),

check_predictions(),

check_singularity(),

check_zeroinflation()

Examples

model <<- lm(len ~ supp + dose, data = ToothGrowth)

check_homogeneity(model)

# plot results

result <- check_homogeneity(model)

plot(result)

Describe Properties of Item Scales

Description

Compute various measures of internal consistencies

applied to (sub)scales, which items were extracted using

parameters::principal_components() or parameters::factor_analysis().

Usage

check_itemscale(x, factor_index = NULL, verbose = TRUE)

Arguments

x |

An object of class |

factor_index |

If |

verbose |

Toggle warnings and messages. If |

Details

check_itemscale() calculates various measures of internal consistencies,

such as Cronbach's alpha, item difficulty or discrimination etc. on subscales

which were built from several items. Subscales are retrieved from the results

of parameters::principal_components() or parameters::factor_analysis(),

i.e. based on how many components were extracted from the PCA, respectively

how many factors were extracted from the factor analysis. check_itemscale()

retrieves those variables that belong to a component and calculates the above

mentioned measures.

Value

A list of data frames, with related measures of internal consistencies of each subscale.

Note

-

Item difficulty should range between 0.2 and 0.8. Ideal value is

p+(1-p)/2(which mostly is between 0.5 and 0.8). Seeitem_difficulty()for details. For item discrimination, also known as corrected item-total correlations, acceptable values are 0.20 or higher; the closer to 1.00 the better. See

item_discrimination()for more details. If an item discrimination is negative, the corresponding item probably need to be reverse-coded (which can be done withdatawizard::reverse()).In case the total Cronbach's alpha value is below the acceptable cut-off of 0.7 (mostly if an index has few items), the mean inter-item-correlation is an alternative measure to indicate acceptability. Satisfactory range lies between 0.2 and 0.4. See also

item_intercor().

References

Briggs SR, Cheek JM (1986) The role of factor analysis in the development and evaluation of personality scales. Journal of Personality, 54(1), 106-148. doi: 10.1111/j.1467-6494.1986.tb00391.x

Examples

# data generation from '?prcomp', slightly modified

C <- chol(S <- toeplitz(0.9^(0:15)))

set.seed(17)

X <- matrix(rnorm(1600), 100, 16)

Z <- X %*% C

pca <- parameters::principal_components(

as.data.frame(Z),

rotation = "varimax",

n = 3

)

pca

check_itemscale(pca)

# as data frame

check_itemscale(

as.data.frame(Z),

factor_index = parameters::closest_component(pca)

)

Visual check of model assumptions

Description

Visual check of various model assumptions (normality of residuals, normality of random effects, linear relationship, homogeneity of variance, multicollinearity).

If check_model() doesn't work as expected, try setting verbose = TRUE to

get hints about possible problems.

Usage

check_model(x, ...)

## Default S3 method:

check_model(

x,

panel = TRUE,

check = "all",

detrend = TRUE,

bandwidth = "nrd",

type = "density",

residual_type = NULL,

show_dots = NULL,

size_dot = 2,

size_line = 0.8,

size_title = 12,

size_axis_title = base_size,

base_size = 10,

alpha = 0.2,

alpha_dot = 0.8,

colors = c("#3aaf85", "#1b6ca8", "#cd201f"),

theme = "see::theme_lucid",

verbose = FALSE,

...

)

Arguments

x |

A model object. |

... |

Arguments passed down to the individual check functions, especially

to |

panel |

Logical, if |

check |

Character vector, indicating which checks for should be performed

and plotted. May be one or more of |

detrend |

Logical. Should Q-Q/P-P plots be detrended? Defaults to

|

bandwidth |

A character string indicating the smoothing bandwidth to

be used. Unlike |

type |

Plot type for the posterior predictive checks plot. Can be |

residual_type |

Character, indicating the type of residuals to be used.

For non-Gaussian models, the default is |

show_dots |

Logical, if |

size_dot, size_line |

Size of line and dot-geoms. |

base_size, size_title, size_axis_title |

Base font size for axis and plot titles. |

alpha, alpha_dot |

The alpha level of the confidence bands and dot-geoms. Scalar from 0 to 1. |

colors |

Character vector with color codes (hex-format). Must be of length 3. First color is usually used for reference lines, second color for dots, and third color for outliers or extreme values. |

theme |

String, indicating the name of the plot-theme. Must be in the

format |

verbose |

If |

Details

For Bayesian models from packages rstanarm or brms,

models will be "converted" to their frequentist counterpart, using

bayestestR::bayesian_as_frequentist.

A more advanced model-check for Bayesian models will be implemented at a

later stage.

See also the related vignette.

Value

The data frame that is used for plotting.

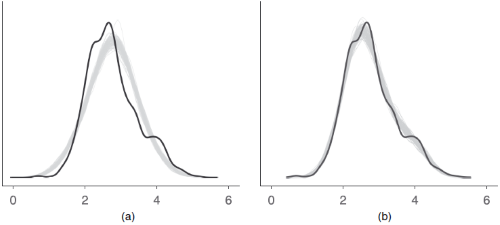

Posterior Predictive Checks

Posterior predictive checks can be used to look for systematic discrepancies

between real and simulated data. It helps to see whether the type of model

(distributional family) fits well to the data. See check_predictions()

for further details.

Linearity Assumption

The plot Linearity checks the assumption of linear relationship.

However, the spread of dots also indicate possible heteroscedasticity (i.e.

non-constant variance, hence, the alias "ncv" for this plot), thus it shows

if residuals have non-linear patterns. This plot helps to see whether

predictors may have a non-linear relationship with the outcome, in which case

the reference line may roughly indicate that relationship. A straight and

horizontal line indicates that the model specification seems to be ok. But

for instance, if the line would be U-shaped, some of the predictors probably

should better be modeled as quadratic term. See check_heteroscedasticity()

for further details.

Some caution is needed when interpreting these plots. Although these plots are helpful to check model assumptions, they do not necessarily indicate so-called "lack of fit", e.g. missed non-linear relationships or interactions. Thus, it is always recommended to also look at effect plots, including partial residuals.

Homogeneity of Variance

This plot checks the assumption of equal variance (homoscedasticity). The desired pattern would be that dots spread equally above and below a straight, horizontal line and show no apparent deviation.

Influential Observations

This plot is used to identify influential observations. If any points in this

plot fall outside of Cook’s distance (the dashed lines) then it is considered

an influential observation. See check_outliers() for further details.

Multicollinearity

This plot checks for potential collinearity among predictors. In a nutshell,

multicollinearity means that once you know the effect of one predictor, the

value of knowing the other predictor is rather low. Multicollinearity might

arise when a third, unobserved variable has a causal effect on each of the

two predictors that are associated with the outcome. In such cases, the actual

relationship that matters would be the association between the unobserved

variable and the outcome. See check_collinearity() for further details.

Normality of Residuals

This plot is used to determine if the residuals of the regression model are

normally distributed. Usually, dots should fall along the line. If there is

some deviation (mostly at the tails), this indicates that the model doesn't

predict the outcome well for that range that shows larger deviations from

the line. For generalized linear models and when residual_type = "normal",

a half-normal Q-Q plot of the absolute value of the standardized deviance

residuals is shown, however, the interpretation of the plot remains the same.

See check_normality() for further details. Usually, for generalized linear

(mixed) models, a test comparing simulated quantile residuals against the

uniform distribution is conducted (see next section).

Distribution of Simulated Quantile Residuals

Fore non-Gaussian models, when residual_type = "simulated" (the default

for generalized linear (mixed) models), residuals are not expected to be

normally distributed. In this case, we generate simulated quantile residuals

to compare whether observed response values deviate from model expectations.

Simulated quantile residuals are generated by simulating a series of

values from a fitted model for each case, comparing the observed response

values to these simulations, and computing the empirical quantile of the

observed value in the distribution of simulated values. When the model is

correctly-specified, these quantile residuals will follow a uniform (flat)

distribution. The Q-Q plot compares the simulated quantile residuals against

a uniform distribution. The plot is interpreted in the same way as for

a normal-distribution Q-Q plot in linear regression.

See simulate_residuals() and check_residuals() for further details.

Overdispersion

For count models, an overdispersion plot is shown. Overdispersion occurs

when the observed variance is higher than the variance of a theoretical model.

For Poisson models, variance increases with the mean and, therefore, variance

usually (roughly) equals the mean value. If the variance is much higher,

the data are "overdispersed". See check_overdispersion() for further

details.

Binned Residuals

For models from binomial families, a binned residuals plot is shown.

Binned residual plots are achieved by cutting the the data into bins and then

plotting the average residual versus the average fitted value for each bin.

If the model were true, one would expect about 95% of the residuals to fall

inside the error bounds. See binned_residuals() for further details.

Residuals for (Generalized) Linear Models

Plots that check the homogeneity of variance use standardized Pearson's

residuals for generalized linear models, and standardized residuals for

linear models. The plots for the normality of residuals (with overlayed

normal curve) and for the linearity assumption use the default residuals

for lm and glm (which are deviance residuals for glm). The Q-Q plots

use simulated quantile residuals (see simulate_residuals()) for

non-Gaussian models and standardized residuals for linear models.

Troubleshooting

For models with many observations, or for more complex models in general,

generating the plot might become very slow. One reason might be that the

underlying graphic engine becomes slow for plotting many data points. In

such cases, setting the argument show_dots = FALSE might help. Furthermore,

look at the check argument and see if some of the model checks could be

skipped, which also increases performance.

If check_model() doesn't work as expected, try setting verbose = TRUE to

get hints about possible problems.

Note

This function just prepares the data for plotting. To create the plots,

see needs to be installed. Furthermore, this function suppresses

all possible warnings. In case you observe suspicious plots, please refer

to the dedicated functions (like check_collinearity(),

check_normality() etc.) to get informative messages and warnings.

See Also

Other functions to check model assumptions and and assess model quality:

check_autocorrelation(),

check_collinearity(),

check_convergence(),

check_heteroscedasticity(),

check_homogeneity(),

check_outliers(),

check_overdispersion(),

check_predictions(),

check_singularity(),

check_zeroinflation()

Examples

m <- lm(mpg ~ wt + cyl + gear + disp, data = mtcars)

check_model(m)

data(sleepstudy, package = "lme4")

m <- lme4::lmer(Reaction ~ Days + (Days | Subject), sleepstudy)

check_model(m, panel = FALSE)

Check if a distribution is unimodal or multimodal

Description

For univariate distributions (one-dimensional vectors), this functions performs a Ameijeiras-Alonso et al. (2018) excess mass test. For multivariate distributions (data frames), it uses mixture modelling. However, it seems that it always returns a significant result (suggesting that the distribution is multimodal). A better method might be needed here.

Usage

check_multimodal(x, ...)

Arguments

x |

A numeric vector or a data frame. |

... |

Arguments passed to or from other methods. |

References

Ameijeiras-Alonso, J., Crujeiras, R. M., and Rodríguez-Casal, A. (2019). Mode testing, critical bandwidth and excess mass. Test, 28(3), 900-919.

Examples

# Univariate

x <- rnorm(1000)

check_multimodal(x)

x <- c(rnorm(1000), rnorm(1000, 2))

check_multimodal(x)

# Multivariate

m <- data.frame(

x = rnorm(200),

y = rbeta(200, 2, 1)

)

plot(m$x, m$y)

check_multimodal(m)

m <- data.frame(

x = c(rnorm(100), rnorm(100, 4)),

y = c(rbeta(100, 2, 1), rbeta(100, 1, 4))

)

plot(m$x, m$y)

check_multimodal(m)

Check model for (non-)normality of residuals.

Description

Check model for (non-)normality of residuals.

Usage

check_normality(x, ...)

## S3 method for class 'merMod'

check_normality(x, effects = "fixed", ...)

Arguments

x |

A model object. |

... |

Currently not used. |

effects |

Should normality for residuals ( |

Details

check_normality() calls stats::shapiro.test and checks the

standardized residuals (or studentized residuals for mixed models) for

normal distribution. Note that this formal test almost always yields

significant results for the distribution of residuals and visual inspection

(e.g. Q-Q plots) are preferable. For generalized linear models, no formal

statistical test is carried out. Rather, there's only a plot() method for

GLMs. This plot shows a half-normal Q-Q plot of the absolute value of the

standardized deviance residuals is shown (in line with changes in

plot.lm() for R 4.3+).

Value

The p-value of the test statistics. A p-value < 0.05 indicates a significant deviation from normal distribution.

Note

For mixed-effects models, studentized residuals, and not

standardized residuals, are used for the test. There is also a

plot()-method

implemented in the see-package.

See Also

see::plot.see_check_normality() for options to customize the plot.

Examples

m <<- lm(mpg ~ wt + cyl + gear + disp, data = mtcars)

check_normality(m)

# plot results

x <- check_normality(m)

plot(x)

# QQ-plot

plot(check_normality(m), type = "qq")

# PP-plot

plot(check_normality(m), type = "pp")

Outliers detection (check for influential observations)

Description

Checks for and locates influential observations (i.e., "outliers") via several distance and/or clustering methods. If several methods are selected, the returned "Outlier" vector will be a composite outlier score, made of the average of the binary (0 or 1) results of each method. It represents the probability of each observation of being classified as an outlier by at least one method. The decision rule used by default is to classify as outliers observations which composite outlier score is superior or equal to 0.5 (i.e., that were classified as outliers by at least half of the methods). See the Details section below for a description of the methods.

Usage

check_outliers(x, ...)

## Default S3 method:

check_outliers(

x,

method = c("cook", "pareto"),

threshold = NULL,

ID = NULL,

verbose = TRUE,

...

)

## S3 method for class 'numeric'

check_outliers(x, method = "zscore_robust", threshold = NULL, ...)

## S3 method for class 'data.frame'

check_outliers(x, method = "mahalanobis", threshold = NULL, ID = NULL, ...)

## S3 method for class 'performance_simres'

check_outliers(

x,

type = "default",

iterations = 100,

alternative = "two.sided",

...

)

Arguments

x |

A model, a data.frame, a |

... |

When |

method |

The outlier detection method(s). Can be |

threshold |

A list containing the threshold values for each method (e.g.

|

ID |

Optional, to report an ID column along with the row number. |

verbose |

Toggle warnings. |

type |

Type of method to test for outliers. Can be one of |

iterations |

Number of simulations to run. |

alternative |

A character string specifying the alternative hypothesis.

Can be one of |

Details

Outliers can be defined as particularly influential observations. Most methods rely on the computation of some distance metric, and the observations greater than a certain threshold are considered outliers. Importantly, outliers detection methods are meant to provide information to consider for the researcher, rather than to be an automatized procedure which mindless application is a substitute for thinking.

An example sentence for reporting the usage of the composite method could be:

"Based on a composite outlier score (see the 'check_outliers' function in the 'performance' R package; Lüdecke et al., 2021) obtained via the joint application of multiple outliers detection algorithms (Z-scores, Iglewicz, 1993; Interquartile range (IQR); Mahalanobis distance, Cabana, 2019; Robust Mahalanobis distance, Gnanadesikan and Kettenring, 1972; Minimum Covariance Determinant, Leys et al., 2018; Invariant Coordinate Selection, Archimbaud et al., 2018; OPTICS, Ankerst et al., 1999; Isolation Forest, Liu et al. 2008; and Local Outlier Factor, Breunig et al., 2000), we excluded n participants that were classified as outliers by at least half of the methods used."

Value

A logical vector of the detected outliers with a nice printing

method: a check (message) on whether outliers were detected or not. The

information on the distance measure and whether or not an observation is

considered as outlier can be recovered with the as.data.frame function.

Note that the function will (silently) return a vector of FALSE for

non-supported data types such as character strings.

Model-specific methods

-

Cook's Distance: Among outlier detection methods, Cook's distance and leverage are less common than the basic Mahalanobis distance, but still used. Cook's distance estimates the variations in regression coefficients after removing each observation, one by one (Cook, 1977). Since Cook's distance is in the metric of an F distribution with p and n-p degrees of freedom, the median point of the quantile distribution can be used as a cut-off (Bollen, 1985). A common approximation or heuristic is to use 4 divided by the numbers of observations, which usually corresponds to a lower threshold (i.e., more outliers are detected). This only works for frequentist models. For Bayesian models, see

pareto. -

Pareto: The reliability and approximate convergence of Bayesian models can be assessed using the estimates for the shape parameter k of the generalized Pareto distribution. If the estimated tail shape parameter k exceeds 0.5, the user should be warned, although in practice the authors of the loo package observed good performance for values of k up to 0.7 (the default threshold used by

performance).

Univariate methods

-

Z-scores

("zscore", "zscore_robust"): The Z-score, or standard score, is a way of describing a data point as deviance from a central value, in terms of standard deviations from the mean ("zscore") or, as it is here the case ("zscore_robust") by default (Iglewicz, 1993), in terms of Median Absolute Deviation (MAD) from the median (which are robust measures of dispersion and centrality). The default threshold to classify outliers is 1.959 (threshold = list("zscore" = 1.959)), corresponding to the 2.5% (qnorm(0.975)) most extreme observations (assuming the data is normally distributed). Importantly, the Z-score method is univariate: it is computed column by column. If a data frame is passed, the Z-score is calculated for each variable separately, and the maximum (absolute) Z-score is kept for each observations. Thus, all observations that are extreme on at least one variable might be detected as outliers. Thus, this method is not suited for high dimensional data (with many columns), returning too liberal results (detecting many outliers). -

IQR

("iqr"): Using the IQR (interquartile range) is a robust method developed by John Tukey, which often appears in box-and-whisker plots (e.g., in ggplot2::geom_boxplot). The interquartile range is the range between the first and the third quartiles. Tukey considered as outliers any data point that fell outside of either 1.5 times (the default threshold is 1.7) the IQR below the first or above the third quartile. Similar to the Z-score method, this is a univariate method for outliers detection, returning outliers detected for at least one column, and might thus not be suited to high dimensional data. The distance score for the IQR is the absolute deviation from the median of the upper and lower IQR thresholds. Then, this value is divided by the IQR threshold, to “standardize” it and facilitate interpretation. -

CI

("ci", "eti", "hdi", "bci"): Another univariate method is to compute, for each variable, some sort of "confidence" interval and consider as outliers values lying beyond the edges of that interval. By default,"ci"computes the Equal-Tailed Interval ("eti"), but other types of intervals are available, such as Highest Density Interval ("hdi") or the Bias Corrected and Accelerated Interval ("bci"). The default threshold is0.95, considering as outliers all observations that are outside the 95% CI on any of the variable. SeebayestestR::ci()for more details about the intervals. The distance score for the CI methods is the absolute deviation from the median of the upper and lower CI thresholds. Then, this value is divided by the difference between the upper and lower CI bounds divided by two, to “standardize” it and facilitate interpretation.

Multivariate methods

-

Mahalanobis Distance: Mahalanobis distance (Mahalanobis, 1930) is often used for multivariate outliers detection as this distance takes into account the shape of the observations. The default

thresholdis often arbitrarily set to some deviation (in terms of SD or MAD) from the mean (or median) of the Mahalanobis distance. However, as the Mahalanobis distance can be approximated by a Chi squared distribution (Rousseeuw and Van Zomeren, 1990), we can use the alpha quantile of the chi-square distribution with k degrees of freedom (k being the number of columns). By default, the alpha threshold is set to 0.025 (corresponding to the 2.5\ Cabana, 2019). This criterion is a natural extension of the median plus or minus a coefficient times the MAD method (Leys et al., 2013). -

Robust Mahalanobis Distance: A robust version of Mahalanobis distance using an Orthogonalized Gnanadesikan-Kettenring pairwise estimator (Gnanadesikan and Kettenring, 1972). Requires the bigutilsr package. See the

bigutilsr::dist_ogk()function. -

Minimum Covariance Determinant (MCD): Another robust version of Mahalanobis. Leys et al. (2018) argue that Mahalanobis Distance is not a robust way to determine outliers, as it uses the means and covariances of all the data - including the outliers - to determine individual difference scores. Minimum Covariance Determinant calculates the mean and covariance matrix based on the most central subset of the data (by default, 66\ is deemed to be a more robust method of identifying and removing outliers than regular Mahalanobis distance. This method has a

percentage_centralargument that allows specifying the breakdown point (0.75, the default, is recommended by Leys et al. 2018, but a commonly used alternative is 0.50). -

Invariant Coordinate Selection (ICS): The outlier are detected using ICS, which by default uses an alpha threshold of 0.025 (corresponding to the 2.5\ value for outliers classification. Refer to the help-file of

ICSOutlier::ics.outlier()to get more details about this procedure. Note thatmethod = "ics"requires both ICS and ICSOutlier to be installed, and that it takes some time to compute the results. You can speed up computation time using parallel computing. Set the number of cores to use withoptions(mc.cores = 4)(for example). -

OPTICS: The Ordering Points To Identify the Clustering Structure (OPTICS) algorithm (Ankerst et al., 1999) is using similar concepts to DBSCAN (an unsupervised clustering technique that can be used for outliers detection). The threshold argument is passed as

minPts, which corresponds to the minimum size of a cluster. By default, this size is set at 2 times the number of columns (Sander et al., 1998). Compared to the other techniques, that will always detect several outliers (as these are usually defined as a percentage of extreme values), this algorithm functions in a different manner and won't always detect outliers. Note thatmethod = "optics"requires the dbscan package to be installed, and that it takes some time to compute the results. Additionally, theoptics_xi(default to 0.05) is passed to thedbscan::extractXi()function to further refine the cluster selection. -

Local Outlier Factor: Based on a K nearest neighbors algorithm, LOF compares the local density of a point to the local densities of its neighbors instead of computing a distance from the center (Breunig et al., 2000). Points that have a substantially lower density than their neighbors are considered outliers. A LOF score of approximately 1 indicates that density around the point is comparable to its neighbors. Scores significantly larger than 1 indicate outliers. The default threshold of 0.025 will classify as outliers the observations located at

qnorm(1-0.025) * SD)of the log-transformed LOF distance. Requires the dbscan package.

Methods for simulated residuals

The approach for detecting outliers based on simulated residuals differs

from the traditional methods and may not be detecting outliers as expected.

Literally, this approach compares observed to simulated values. However, we

do not know the deviation of the observed data to the model expectation, and

thus, the term "outlier" should be taken with a grain of salt. It refers to

"simulation outliers". Basically, the comparison tests whether on observed

data point is outside the simulated range. It is strongly recommended to read

the related documentations in the DHARMa package, e.g. ?DHARMa::testOutliers.

Threshold specification

Default thresholds are currently specified as follows:

list( zscore = stats::qnorm(p = 1 - 0.001 / 2), zscore_robust = stats::qnorm(p = 1 - 0.001 / 2), iqr = 1.7, ci = 1 - 0.001, eti = 1 - 0.001, hdi = 1 - 0.001, bci = 1 - 0.001, cook = stats::qf(0.5, ncol(x), nrow(x) - ncol(x)), pareto = 0.7, mahalanobis = stats::qchisq(p = 1 - 0.001, df = ncol(x)), mahalanobis_robust = stats::qchisq(p = 1 - 0.001, df = ncol(x)), mcd = stats::qchisq(p = 1 - 0.001, df = ncol(x)), ics = 0.001, optics = 2 * ncol(x), optics_xi = 0.05, lof = 0.001 )

Meta-analysis models

For meta-analysis models (e.g. objects of class rma from the metafor

package or metagen from package meta), studies are defined as outliers

when their confidence interval lies outside the confidence interval of the

pooled effect.

Note

There is also a

plot()-method

implemented in the see package.

Please note that the range of the distance-values along the y-axis is

re-scaled to range from 0 to 1.

References

Archimbaud, A., Nordhausen, K., and Ruiz-Gazen, A. (2018). ICS for multivariate outlier detection with application to quality control. Computational Statistics and Data Analysis, 128, 184-199. doi:10.1016/j.csda.2018.06.011

Gnanadesikan, R., and Kettenring, J. R. (1972). Robust estimates, residuals, and outlier detection with multiresponse data. Biometrics, 81-124.

Bollen, K. A., and Jackman, R. W. (1985). Regression diagnostics: An expository treatment of outliers and influential cases. Sociological Methods and Research, 13(4), 510-542.

Cabana, E., Lillo, R. E., and Laniado, H. (2019). Multivariate outlier detection based on a robust Mahalanobis distance with shrinkage estimators. arXiv preprint arXiv:1904.02596.

Cook, R. D. (1977). Detection of influential observation in linear regression. Technometrics, 19(1), 15-18.

Iglewicz, B., and Hoaglin, D. C. (1993). How to detect and handle outliers (Vol. 16). Asq Press.

Leys, C., Klein, O., Dominicy, Y., and Ley, C. (2018). Detecting multivariate outliers: Use a robust variant of Mahalanobis distance. Journal of Experimental Social Psychology, 74, 150-156.

Liu, F. T., Ting, K. M., and Zhou, Z. H. (2008, December). Isolation forest. In 2008 Eighth IEEE International Conference on Data Mining (pp. 413-422). IEEE.

Lüdecke, D., Ben-Shachar, M. S., Patil, I., Waggoner, P., and Makowski, D. (2021). performance: An R package for assessment, comparison and testing of statistical models. Journal of Open Source Software, 6(60), 3139. doi:10.21105/joss.03139

Thériault, R., Ben-Shachar, M. S., Patil, I., Lüdecke, D., Wiernik, B. M., and Makowski, D. (2023). Check your outliers! An introduction to identifying statistical outliers in R with easystats. Behavior Research Methods, 1-11. doi:10.3758/s13428-024-02356-w

Rousseeuw, P. J., and Van Zomeren, B. C. (1990). Unmasking multivariate outliers and leverage points. Journal of the American Statistical association, 85(411), 633-639.

See Also

see::plot.see_check_outliers() for options to customize the plot.

Other functions to check model assumptions and and assess model quality:

check_autocorrelation(),

check_collinearity(),

check_convergence(),

check_heteroscedasticity(),

check_homogeneity(),

check_model(),

check_overdispersion(),

check_predictions(),

check_singularity(),

check_zeroinflation()

Examples

data <- mtcars # Size nrow(data) = 32

# For single variables ------------------------------------------------------

# Find all observations beyond +/- 2 SD

outliers_list <- check_outliers(data$mpg, method = "zscore", threshold = 2)

outliers_list # Show the row index of the outliers

as.numeric(outliers_list) # The object is a binary vector...

filtered_data <- data[!outliers_list, ] # And can be used to filter a data frame

nrow(filtered_data) # New size, 30 (2 outliers removed)

# For dataframes ------------------------------------------------------

check_outliers(data, threshold = 2) # It works the same way on data frames

# You can also use multiple methods at once

outliers_list <- check_outliers(data, method = c(

"mahalanobis",

"iqr",

"zscore"

))

outliers_list

# Using `as.data.frame()`, we can access more details!

outliers_info <- as.data.frame(outliers_list)

head(outliers_info)

outliers_info$Outlier # Including the probability of being an outlier

# And we can be more stringent in our outliers removal process

filtered_data <- data[outliers_info$Outlier < 0.1, ]

# We can run the function stratified by groups using `{datawizard}` package:

group_iris <- datawizard::data_group(iris, "Species")

check_outliers(group_iris)

# nolint start

# nolint end

# You can also run all the methods

check_outliers(data, method = "all", verbose = FALSE)